This blog is now hosted on codetrips.com (WordPress)

This post is best read there, as that is where I will add any updates.

------------------

As I mentioned earlier, testing on Android is not for the faint-hearted (or the man-in-a-hurry): documentation is very thin on the ground (although I've recently seen a few testing-related articles appear in the Developer documentation for the latest SDK; I haven't checked them out yet, though) and the API is cumbersome at the best of times (and outright misleading at the worst).

The "master class" for testing an Activity class, MyActivity, is ActivityInstrumentationTestCase2<MyActivity>, which instruments and initializes it.

Its documentation is marginally better than that for the android.test package ("A framework for writing Android test cases and suites."), but, hey, we haven't set the bar too high here.

Here are a few 'top tips', discovered whilst developing unit tests for my AndroidReceipts application, on how to avoid some of the grief:

Do not use the Eclipse-generated constructor: pass the package and Activity class to super(), then call setName(name)

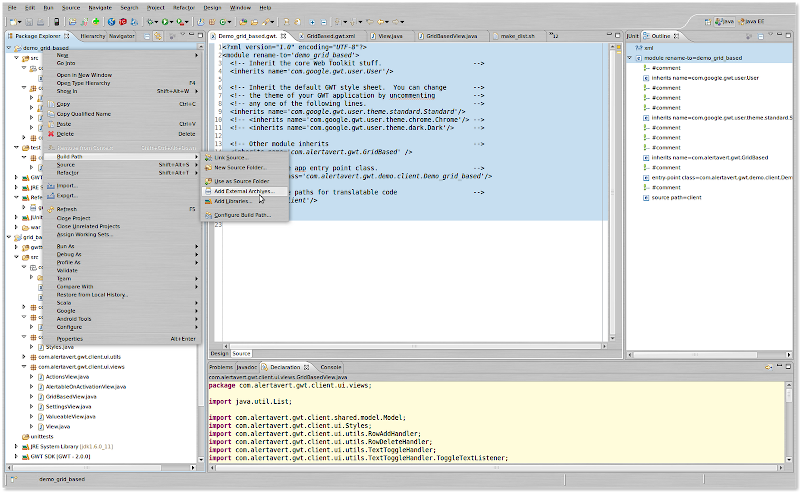

In Eclipse, you can right-click on a class in the Project Explorer and select New/Other... to create a new JUnit TestCase; if, in the ensuing dialog box, you select ActivityInstrumentationTestCase2 as the 'super' class, the plugin will helpfully auto-generate the full class with the following constructor:

// DON'T DO THIS - it won't compile
public MyActivityTest(String name) {
    super(name);
}

Here, Eclipse will (correctly) complain that there is no such thing as an ActivityInstrumentationTestCase2(String name) constructor, and you will quickly figure out that a possible super call may be something like this:

// DON'T DO THIS - it won't work
public MyActivityTest(String name) {
    super(PKG_NAME, MyActivity.class);
}

This will, sadly, cause the test runner (android.test.InstrumentationTestRunner) to happily ignore your test class:

[2010-06-06 22:33:20 - PolarisTest] Test run failed: Test run incomplete. Expected 51 tests, received 4

The trick is to add a call to setName(name) so that the test runner will find your tests (name, incidentally, is the name of the test method being run).

// Do this instead:
public MyActivityTest(String name) {
    // NOTE: API Level 8 deprecates this constructor in favour of one that takes only the Class<T> argument
    super(PKG_NAME, MyActivity.class);
    // lets the test runner find the test method to run
    setName(name);
}

If you are using SDK 2.2 (API Level 8), there appears to be a new constructor that takes only the Class of the Activity under test (and the constructor shown above is deprecated). I have not tried it out: targeting Level 8 devices, at the moment, rather severely restricts your target market.
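Why does setName(name) make such a difference? As I understand JUnit 3, on which android.test is built, the runner creates one test-case instance per test method, passing the method's name to your String constructor (or calling setName() itself when there is only a no-argument constructor), and TestCase.runTest() then looks the method up by reflection using getName(). A constructor that drops the name leaves the runner nothing to run. The plain-Java sketch below models just that lookup; MiniTestCase, ForgetsName and RemembersName are made-up names, not the real JUnit or Android classes:

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, much-simplified model of how a JUnit 3 style runner picks the method to run. */
public class NameLookupDemo {

    /** Stand-in for junit.framework.TestCase (NOT the real class). */
    static abstract class MiniTestCase {
        private String name;

        public void setName(String name) { this.name = name; }
        public String getName() { return name; }

        /** Mirrors the idea behind TestCase.runTest(): find the method by name, then invoke it. */
        public String run() {
            if (name == null) {
                return "FAILED: test name is null, nothing to run";
            }
            try {
                Method m = getClass().getMethod(name);
                m.invoke(this);
                return "PASSED: " + name;
            } catch (ReflectiveOperationException e) {
                return "FAILED: " + e.getClass().getSimpleName() + " for " + name;
            }
        }
    }

    /** Like the "won't work" constructor: the name is silently dropped. */
    public static class ForgetsName extends MiniTestCase {
        public ForgetsName(String name) { }
        public void testSomething() { }
    }

    /** Like the fixed constructor: the name is stored via setName(). */
    public static class RemembersName extends MiniTestCase {
        public RemembersName(String name) { setName(name); }
        public void testSomething() { }
    }

    public static List<String> runBoth() {
        List<String> results = new ArrayList<>();
        results.add(new ForgetsName("testSomething").run());
        results.add(new RemembersName("testSomething").run());
        return results;
    }

    public static void main(String[] args) {
        runBoth().forEach(System.out::println);
    }
}
```

Running main() prints a FAILED line for ForgetsName and "PASSED: testSomething" for RemembersName: same test method, the only difference being whether the constructor kept the name.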

Beware of Eclipse (ADT) missing a change in project source

The typical cycle is to write some code, run the tests, make changes, run the tests again.

This generally works but, from time to time (I've been unable to discern a pattern), ADT misses a change in your source code and simply re-runs the same tests as before.

As this typically happens when you make changes to the 'main' project (as opposed to the 'test' one), I suspect it happens when you do not save the modified source file: this in turn does not trigger a re-build of the 'main' APK, a change that the deployer of the 'test' project would otherwise have picked up.

Be that as it may, keep an eye on the Console view and check that a new version of the APK for the 'main' or 'test' project (or both) gets installed on the emulator.

If you 'remove' the app manually, you must also 'clean' the project

By the same token, at times the only way to get ADT out of the hole it has dug itself is to go into the Emulator's Settings/Applications/Manage Applications and remove either or both of the installed projects.

This will make the test runner deeply unhappy, and it will show its unhappiness by refusing to deploy the APKs and logging:

[2010-06-06 19:28:31 - PolarisTest] Application already deployed. No need to reinstall.

One way to quickly 'fix' this is to go into Project/Clean... and clean one or both of the projects.

The default tearDown() calls onPause() (but not onStop(), let alone onDestroy())

Well, I was surprised to find that out: it would have been reasonable to expect the test fixture to run through the whole Activity lifecycle and shut it down "gracefully".

If you have some 'session management' (and who doesn't these days, in a serious Android app? You want to preserve state so that the user can come back to your app and find it exactly the way she left it), this may cause some surprising results when testing.

Considering that the Android process management system gives no guarantee of a 'graceful shutdown' (essentially, the scheduler wants to feel free to kill your process without having to wait for your app to get itself sorted out; and quite rightly so: we don't want a "Windows Experience" where some poorly-paid and even less-trained programmer can bring the whole system to its knees by sheer incompetence), this is just as well. In fact, the more I look into it, the more I find myself doing state management in the onPause / onCreate / onRestart lifecycle methods, and essentially ignoring onStop and onDestroy (the latter in particular: I sometimes wonder why it's there at all).

Whatever: a word to the wise, your Activity's onStop/onDestroy won't be called unless you call them yourself.

Unit tests run concurrently, but, apparently, @UiThreadTest prevents this for the UI thread

I must confess I'm not entirely clear about the full implications of using the @UiThreadTest annotation (apart from ensuring that the tests will run sequentially in the UI thread, thus avoiding the predictable chaos if they were all allowed to access UI resources concurrently), but one fundamental implication is that sendKeys cannot be called from within a test annotated with @UiThreadTest.

Despite the documentation being conspicuously silent about this minor detail, it turns out that sendKeys must not be run in the UI thread; all we are told about this method is that it "Sends a series of key events through instrumentation and waits for idle." Whatever that means, the bottom line is that the call does not return and your test will eventually fail with a timeout exception.

From my limited experimentation, the only workarounds are either to use it only in tests that are annotated with something such as @SmallTest or similar (but not @UiThreadTest), or to make it run in a separate thread (just create a Runnable that will execute once you are sure the UI elements have been initialized: critically, once the layout has been 'inflated').
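I can only guess at what happens inside sendKeys, but "waits for idle" points to a likely culprit: if a method blocks until the UI thread has processed the events it has just queued, and you call it from the UI thread itself, that thread is busy waiting and can never get round to processing them. The sketch below is a plain-Java analogy, not Android code: WaitForIdleDemo and its methods are made up, and a single-threaded ExecutorService merely stands in for the UI thread and its message queue. A timeout stands in for the test runner's timeout, so the demo terminates:

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Plain-Java analogy: one worker thread plays the part of the UI thread. */
public class WaitForIdleDemo {

    private final ExecutorService uiThread = Executors.newSingleThreadExecutor();

    /**
     * Hypothetical stand-in for a "send events and wait for idle" helper: posts work to the
     * "UI thread" and blocks until it has been processed, or until the timeout expires.
     */
    public boolean sendEventsAndWait(long timeoutMs) throws InterruptedException, ExecutionException {
        Future<?> posted = uiThread.submit(() -> { /* process the key events */ });
        try {
            posted.get(timeoutMs, TimeUnit.MILLISECONDS);
            return true;   // the events were processed
        } catch (TimeoutException e) {
            return false;  // the only thread that could process them was busy waiting
        }
    }

    /** Like a test NOT annotated with @UiThreadTest: the call is made from the test thread. */
    public boolean callFromTestThread() throws Exception {
        return sendEventsAndWait(1000);
    }

    /** Like a test annotated with @UiThreadTest: the call is made on the "UI thread" itself. */
    public boolean callFromUiThread() throws Exception {
        Future<Boolean> outer = uiThread.submit(() -> sendEventsAndWait(200));
        return outer.get();
    }

    public void shutdown() {
        uiThread.shutdownNow();
    }

    public static void main(String[] args) throws Exception {
        WaitForIdleDemo demo = new WaitForIdleDemo();
        try {
            System.out.println("from test thread: " + demo.callFromTestThread()); // true
            System.out.println("from UI thread:   " + demo.callFromUiThread());   // false
        } finally {
            demo.shutdown();
        }
    }
}
```

The call made from the test thread completes, while the same call made on the "UI thread" times out, which is consistent with the workarounds above: keep sendKeys out of @UiThreadTest tests, or run it on a thread other than the UI one.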

And, on this topic...

Activity.findViewById(id): only use it after you have 'inflated' the View (typically, by calling setContentView() with an R.layout.my_layout resource)

Nothing much to add here, really: just be careful about how you sequence your asserts if you need to verify conditions on UI elements (typically, Widgets), as findViewById will invariably return null until the view is 'inflated' from the XML (if this is, indeed, the way you build your View).

On a similar note, I've found Window.getCurrentFocus() (and similarly, Activity.getCurrentFocus()) pretty much useless: naively, I thought that, once the Layout had been inflated, the focused view would be the main container (or some random widget therein: that would have worked for my tests). In fact, this call almost invariably returns null (unless, I presume, you sendKeys to move the focus where you want it to be: this is rather cumbersome, in my opinion, and makes the tests brittle and too tightly coupled to the UI layout, which, in my book anyway, is A Bad Thing).

So, here is what I do instead:

@UiThreadTest
public void testOnDisplayReceipts() {
    Receipt r = new Receipt();
    instance.accept(r);
    instance.onDisplayReceipts();
    assertNotNull(instance.mGallery);
    // verifies that the Gallery view has been 'inflated'
    View gallery = instance.findViewById(R.id.gallery_layout);
    assertNotNull(gallery);
}

OK, it's not pretty, I'll give you that, but it works (and it is largely independent of what I do in my Gallery view: which widgets are there and how they are arranged).

Beware of super.tearDown()

Worthy of featuring in one of Bloch's Puzzlers: what does this code do when run with an InstrumentationTestRunner?

You may also want to know that the test passes and that, upon exiting from testOnCreateSQLiteDatabase, the stub field holds, in its package-visible db field, a valid reference to the SQLiteDatabase just created and opened.

import java.io.File;

import android.database.sqlite.SQLiteDatabase;
import android.test.ActivityInstrumentationTestCase2;
import android.util.Log;

public class ReceiptsDbOpenHelperTest extends ActivityInstrumentationTestCase2<ScanActivity> {

    public ReceiptsDbOpenHelperTest(String name) {
        super("com.google.android.applications.receiptscan", ScanActivity.class);
        setName(name);
    }

    public static final String DB_NAME = "test_db";

    ReceiptsDbOpenHelperStub stub;

    protected void setUp() throws Exception {
        super.setUp();
    }

    protected void tearDown() throws Exception {
        super.tearDown();
        if (stub != null) {
            String path = stub.db.getPath();
            Log.d("test", "Cleaning up " + path);
            if (path != null) {
                File dbFile = new File(path);
                boolean wasDeleted = dbFile.delete();
                Log.d("test", "Database was " + (wasDeleted ? "" : "not ") + "deleted");
            }
        }
    }

    /**
     * Ignore the details, but this does "work as intended": it opens the database and returns a reference
     * to it in the db variable.
     * The {@code stub} is a class derived from {@link ReceiptsDbOpenHelper} and simply gives us access to
     * some protected / private fields.
     */
    public void testOnCreateSQLiteDatabase() {
        stub = new ReceiptsDbOpenHelperStub(getActivity(), DB_NAME, null, 1);
        SQLiteDatabase db = stub.getReadableDatabase();
        assertNotNull(db);
        assertTrue(stub.wasCreated);
        assertEquals(stub.db, db);
    }
}

Well, you may be surprised to know that our tearDown() does absolutely nothing: after the call to super.tearDown(), stub is null. (You can check this in a debugger session; at least, that's what I did: upon entering ReceiptsDbOpenHelperTest.tearDown(), stub is a perfectly valid reference; just after the call to super.tearDown(), it's null.) Apparently, a call to super.tearDown() on an ActivityInstrumentationTestCase2<MyActivity> wipes out all the 'context-related' instance variables.

Yes, I was too.

The fix is, obviously, trivial: move the call to super.tearDown() to the bottom of your tearDown() (luckily, this is not a constructor, so there's nothing stopping you).
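Why would a superclass null out fields it doesn't even know about? A plausible explanation (an assumption on my part, worth checking against the SDK sources for your version, where I believe a scrubClass() helper in android.test.ActivityTestCase is responsible) is that the framework's tearDown() uses reflection to set the test case's non-primitive, non-final instance fields to null, as a guard against memory leaks. The plain-Java sketch below models that behaviour; ScrubbingTestCase, CleansUpTooLate and CleansUpFirst are hypothetical names, not Android classes. It shows why the position of super.tearDown() matters:

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

public class ScrubDemo {

    /** Hypothetical base class whose tearDown() scrubs the concrete test class's fields. */
    public static class ScrubbingTestCase {
        protected void tearDown() throws Exception {
            // Null out every non-static, non-final, non-primitive field declared by the subclass.
            for (Field f : getClass().getDeclaredFields()) {
                int mods = f.getModifiers();
                if (!f.getType().isPrimitive() && !Modifier.isStatic(mods) && !Modifier.isFinal(mods)) {
                    f.setAccessible(true);
                    f.set(this, null);
                }
            }
        }
    }

    /** Records what each tearDown() could see when it tried to clean up. */
    static final List<String> log = new ArrayList<>();

    /** Calls super.tearDown() first, as in the puzzler: the reference is already gone. */
    public static class CleansUpTooLate extends ScrubbingTestCase {
        Object stub = "open database";

        @Override
        protected void tearDown() throws Exception {
            super.tearDown();
            log.add("too late: stub = " + stub);
        }
    }

    /** Does its own clean-up first, then calls super.tearDown(): the fix. */
    public static class CleansUpFirst extends ScrubbingTestCase {
        Object stub = "open database";

        @Override
        protected void tearDown() throws Exception {
            log.add("first: stub = " + stub);
            super.tearDown();
        }
    }

    public static List<String> runBoth() throws Exception {
        log.clear();
        new CleansUpTooLate().tearDown();
        new CleansUpFirst().tearDown();
        return new ArrayList<>(log);
    }

    public static void main(String[] args) throws Exception {
        runBoth().forEach(System.out::println);
        // too late: stub = null
        // first: stub = open database
    }
}
```

In the model, as in the real test, the subclass that calls super.tearDown() first finds its field already nulled, while the one that cleans up first still sees the live reference.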

Summary

There seems to be light at the end of the tunnel: some "official" documentation is starting to appear on Android.com, the SDK is (slowly) becoming (marginally) more user-friendly, and writing (and running) unit tests is no longer as painful as it used to be in version 1.0.

However, one might have wished it hadn't taken until release 2.2 of the SDK (API Level 8) to get where we are now. For one thing, it's rather likely that Android will be the first (or main) programming platform that many kids take up when they start exploring computing and software development; and whilst programming in Android is fun, productive and gives an immediate sense of accomplishment, I am concerned that, with testing (and unit testing in particular) given such a back seat, young computer scientists may learn the wrong lesson: namely, that testing (and coding for testing) is something that can be done another day, when we get to the next release...

It isn't: writing unit tests is vital to writing bug-free, solid and portable code; it also encourages the design of clean APIs. There's nothing like writing a few tests to figure out that one has just written a cumbersome API that needs fixing, and the sooner one finds out, the better!

The 'fix' is rather simple: just download the JAR for Swing Desktop from here, and drop the swinglabs-0.8.0.jar file into the [sdk-install-dir]/tools/lib folder.
